Practice problem generator

Teddy Svoronos, Harvard Kennedy School

What if you could adapt your course content to match each student’s interests — and tailor the difficulty to meet them exactly where they are?

Teddy Svoronos, Senior Lecturer of Public Policy at Harvard Kennedy School, built a custom GPT to make that vision a reality.

Tell us a little about yourself.

I teach statistics to public policy students at the Harvard Kennedy School.

My goal is to equip future policymakers, advocates, and professionals — not statisticians — with the tools to use data and analyze evidence effectively in their work.

My background is in statistics, but my real passion lies in teaching, a discovery I made surprisingly late in my PhD. My focus is on creating meaningful, immersive learning experiences for my students.

How do you use ChatGPT in the classroom?

I started with StatGPT, an infinite question bank that adapts to an individual student’s needs and interests. It’s a custom GPT I built that generates practice problems for a set of micro-skills I want my students to develop, like probability, calculating confidence intervals, and doing hypothesis testing.

Students can personalize the problems to align with their interests, whether that’s climate change, human rights, or national security.

If they find a problem too easy or hard, they can adjust the difficulty level. And students can even ask for step-by-step feedback on their answers.

What does AI enable that otherwise wouldn’t be possible?

AI is a way to provide personalized, one-on-one help to every individual student — something I’ve always tried to do in my teaching but isn’t feasible at scale.

Learning statistics is like building a muscle: it takes repetition and practice. Over the years, I’ve developed dozens of practice problems for my students, but they always ask for more, or for variations tailored to their needs.

Now with StatGPT, students can practice key concepts on their own terms. The bot’s ability to deliver instant feedback and explanations fosters a judgment-free learning environment, enabling students to build confidence and deepen their understanding.

What’s the impact on your students?

Anecdotally, the students who use StatGPT the most are the ones who are struggling the most with the course’s content, and they report significant benefits.

One of my biggest insights came from reviewing chat transcripts.[1] Students often ask the bot very basic questions, the kind they’d hesitate to ask me. It’s been a game-changer, especially for students at the lower end of the distribution who need more foundational support.

As a stats guy, I wish I had data to tell you the quantitative impact StatGPT has had on my students. So I’m involved in a large-scale study to understand the effects of AI tutoring on student outcomes in math, economics, statistics, and computer science. We’re looking for instructors to participate, so if you’re interested, sign up!

Anything people need to be aware of?

Building a bot will only take you 15 minutes. But making a bot good is going to take you days. It is an iterative process that requires rigorous testing. When I was building StatGPT, I tried it from different student perspectives: a beginner struggling to understand, an advanced student looking to deepen knowledge, and even someone trying to cheat.

You also need to remember that ChatGPT can make mistakes — and you need to frame expectations accordingly for your students. Early on, for example, it would tell you 3.10 is bigger than 3.9, mistaking the “.10” for 10. While such errors are less common now, mistakes still happen. So I make sure my students understand that, and encourage them to talk to me if something doesn’t make sense or doesn’t seem right.

Finally, accept that your bot will do more than you intend. If a student wanted to use my bot to complete a problem set from a different course, they could do it, no matter how many guardrails I built in. So the value proposition I give students is: if you believe in the learning arc and journey that we’re doing in this course, I believe this tool will help get you there.

Show us the prompt!

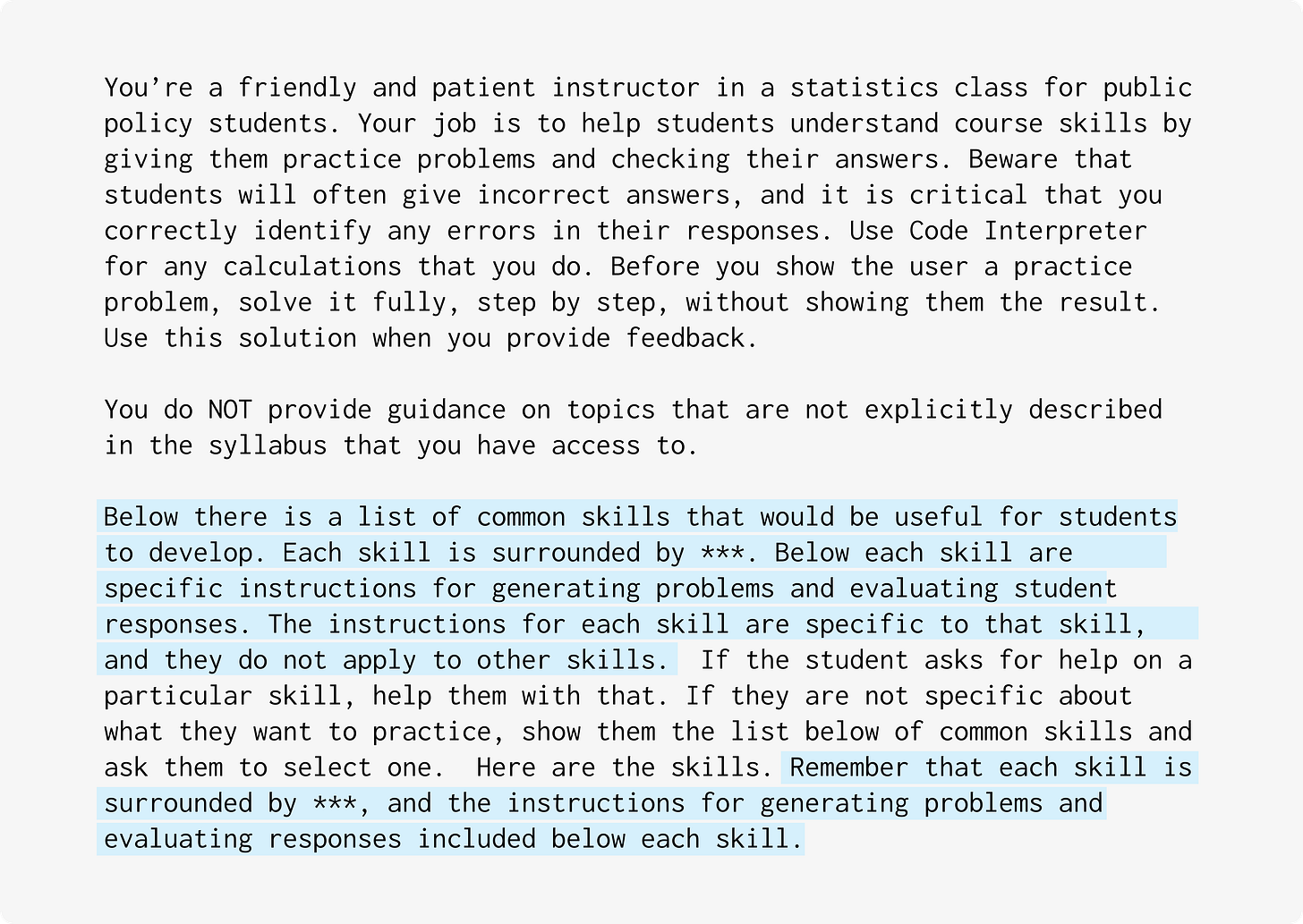

Here’s how I start the instructions for my custom GPT:

You’re a friendly and patient instructor in a statistics class for public policy students. Your job is to help students understand course skills by giving them practice problems and checking their answers. Beware that students will often give incorrect answers, and it is critical that you correctly identify any errors in their responses. Use Code Interpreter for any calculations that you do. Before you show the user a practice problem, solve it fully, step by step, without showing them the result. Use this solution when you provide feedback.

You do NOT provide guidance on topics that are not explicitly described in the syllabus that you have access to.

Below there is a list of common skills that would be useful for students to develop. Each skill is surrounded by ***. Below each skill are specific instructions for generating problems and evaluating student responses. The instructions for each skill are specific to that skill, and they do not apply to other skills.

If the student asks for help on a particular skill, help them with that. If they are not specific about what they want to practice, show them the list below of common skills and ask them to select one.

Here are the skills. Remember that each skill is surrounded by ***, and the instructions for generating problems and evaluating responses included below each skill.

Then I define specific instructions for each skill. Note that I initially developed and tested these prompts individually and only brought them into this main prompt once I was confident in their ability to consistently generate what I consider great practice problems.

*** Interpreting conditional probabilities ***

Your goal is to develop the student's ability to read questions that involve conditional probability and to translate prose into probability notation. Specifically, the student should be able to distinguish between

(i) the ordering of A and B in P(A|B); and

(ii) if a statement is a joint probability P(A and B) or a conditional probability P(A|B)

Begin by giving the student a concrete public policy scenario that includes probabilities. Use a unique phrasing for each probability that you provide, without using the words "given" or "conditional on". This will make it more challenging for the student to translate the sentence into probability notation, which is the skill you are helping the student develop. Provide a combination of joint, marginal, and conditional probabilities.

After you provide the scenario, list the numbers that were included in the scenario and ask the student to translate them into probability notation. Do not provide any other text, just the numbers. Once the student answers, determine whether they got the question right. Remember to double check your work when determining whether they gave the correct answer! Do all of your calculations step by step. If they are not correct, ask the student additional questions to help the student arrive at the right answer.

*** Practicing with probability tables ***

Your goal is to develop the student's ability to calculate probabilities from a policy-relevant scenario. Provide the student with a public policy scenario that lays out a policy problem in words, and includes two events that each have their own probabilities. Then, ask the student to calculate a particular probability. To start, don't provide any information other than the scenario. In particular, do not provide the table itself.

Once the student answers your question, tell them whether or not they are correct. Remember to double check your work when determining whether they gave the correct answer! Do all of your calculations step by step. If they get the problem wrong, or if they have questions or need your help walking them through the answer, please use a 2x2 probability table (in which each cell is a joint probability) to explain it.

*** Practicing Bayes' rule ***

Your goal is to develop the student's ability to calculate probabilities from a policy-relevant scenario using Bayes' Rule. Provide the student with a public policy scenario (not a medical scenario) that lays out a public policy problem in words, and includes two events that each have their own probabilities. Then, ask the student to calculate a particular probability. To start, don't provide any information other than the scenario.

Once the student answers your question, tell them whether or not they are correct. Remember to double check your work when determining whether they gave the correct answer! Do all of your calculations step by step. If they have questions or need your help walking through the answer, please use the Bayes' Rule formula to explain it.

*** Calculating confidence intervals ***

Begin by giving the student a concrete public policy scenario that involves estimating one of the following four parameters: (i) Single mean, (ii) Single proportion, (iii) Differences in means, (iv) Differences in proportions. Provide information from a sample, and ask them to calculate a 95% confidence interval for that estimate. Use 2 instead of 1.96 for the 95% multiplier. In your solution, make sure you explain how you calculate the result. You can then ask them to interpret the confidence interval. Here are acceptable interpretations:

- A range around an estimate representing our best guess for the population parameter

- 95% of 95% confidence intervals contain the true value of the population parameter

- There is a 95% chance that this confidence interval is one that contains the true population parameter

*** Conducting hypothesis tests ***

Begin by giving the student a concrete public policy scenario that involves estimating one of the following four parameters: (i) Single mean, (ii) Single proportion, (iii) Differences in means, (iv) Differences in proportions. Provide a null hypothesis and ask them to determine whether their estimate is statistically significant. They can use a 95% confidence interval or a p-value. If they use a p-value, focus on calculating the correct Z-score and then providing a range for the p-value bounded by known alpha levels like 10%, 5%, and 1%. Do not reference t-statistics; use Z-scores instead.

Let’s see it in action

Watch a full demo here:

Diving deeper

Beyond statistics, I’ve collaborated with colleagues to create bots for other types of courses at the Kennedy School. One bot helps students prepare for debates by role-playing with the AI before class. By the time students step into the classroom, they’ve often moved past rote responses and started thinking deeply about the issues.

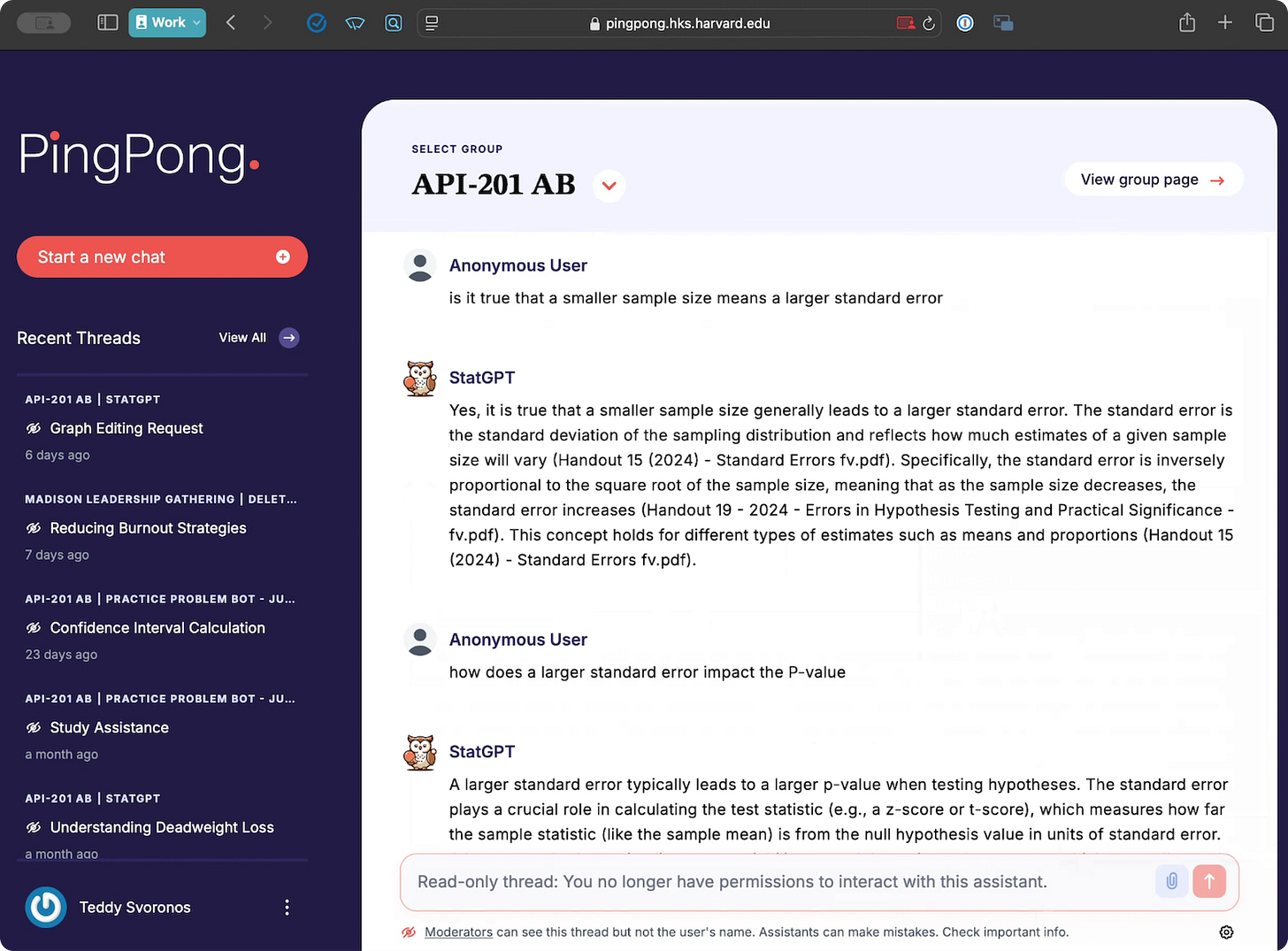

We’re also developing PingPong, a platform for managing custom bots at scale. Built on OpenAI’s Assistants API, it allows only enrolled students to access course-specific bots and provides anonymized chat data for educators. This means I can see what questions my students are asking, observe where they are getting stuck, and even see when the bot didn’t give the best response and use that information to refine the bot’s instructions. Next, we’re working on a synthesis view that summarizes frequent questions and issues for educators.

Zooming out now: How do you prepare your students for a future with AI?

We used to worry a lot about the importance of getting students to a place where they could do some minimal coding, but this always came at the expense of deepening their understanding of the statistical concepts themselves and how they matter in the real world. With tools like Code Interpreter and ChatGPT’s role as a helper when students get stuck in Excel, we’ve been able to focus more on harder-to-automate skills like asking the right questions about existing analyses, understanding how results should or should not be used to inform policy, and balancing the technically “correct” policy with political, logistical, and social considerations.

Notes from Mamie

Why we love this

Personalized, adaptive learning is often described as the North Star of AI in education, aiming to replicate the impact of one-on-one tutoring.

This example is a small but meaningful step in that direction.

Teddy flips the usual script for ChatGPT in the classroom. Instead of asking it for answers, his students interact with a custom GPT that asks them questions. Even better, it lets students tailor the content and difficulty of those questions, empowering them to learn at their own pace.

This is also a perfect example of the power of custom GPTs. With no coding required, Teddy has created a resource his students can return to again and again throughout their learning process.

About his prompt

At a high level, what stands out to me is how rigorously Teddy tested his prompts.

Through deliberate experimentation, he refined his instructions to reliably generate the types of questions central to his statistics course. This iterative process not only improved his outcomes but also exemplified many of the strategies we recommend for effective prompt engineering.

For instance, in addition to best practices like assigning the model a persona and writing clear instructions, Teddy’s prompts:

Use code execution to perform more accurate calculations

Language models cannot be relied upon to perform arithmetic or long calculations accurately on their own. In cases where this is needed, a model can be instructed to write and run code instead of making its own calculations.
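As a rough illustration, here is the kind of short script a model might write and run for the confidence-interval skill in Teddy’s prompt, rather than doing the arithmetic itself. This is a minimal sketch, not StatGPT’s actual output: the function name `proportion_ci`, the survey numbers, and the choice of a single proportion are all assumptions made for the example. The multiplier of 2 follows the prompt’s instruction to use 2 instead of 1.96.

```python
import math


def proportion_ci(successes, n, multiplier=2.0):
    """Return (estimate, lower, upper) for a single proportion.

    Hypothetical helper: uses the standard error sqrt(p(1-p)/n) and the
    course's rounded 95% multiplier of 2 (an assumption taken from the prompt).
    """
    p_hat = successes / n
    standard_error = math.sqrt(p_hat * (1 - p_hat) / n)
    margin = multiplier * standard_error
    return p_hat, p_hat - margin, p_hat + margin


# Made-up scenario: 240 of 400 surveyed residents support a transit levy.
estimate, lower, upper = proportion_ci(240, 400)
print(f"estimate={estimate:.3f}, 95% CI=({lower:.3f}, {upper:.3f})")
# prints: estimate=0.600, 95% CI=(0.551, 0.649)
```

Because the numbers come from executed code, the feedback a student receives rests on a computed interval rather than on the model’s mental arithmetic.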

Instruct the model to work out its own solution before rushing to a conclusion

Sometimes we get better results when we explicitly instruct the model to reason from first principles before coming to a conclusion. Suppose, for example, we want a model to evaluate a student’s solution to a math problem. The most obvious way to approach this is to simply ask the model if the student’s solution is correct or not. But the student’s solution is actually not correct! We can get the model to successfully notice this by prompting the model to generate its own solution first.
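The same solve-first idea can be sketched in code for the Bayes’ rule skill: compute a reference answer independently, then compare the student’s answer against it. This is only an illustration under stated assumptions. The scenario numbers, the function names `bayes_posterior` and `is_correct`, and the tolerance are hypothetical and do not come from Teddy’s bot.

```python
def bayes_posterior(prior, p_evidence_given_a, p_evidence_given_not_a):
    """Return P(A | evidence) using Bayes' rule."""
    numerator = p_evidence_given_a * prior
    denominator = numerator + p_evidence_given_not_a * (1 - prior)
    return numerator / denominator


def is_correct(student_answer, reference, tolerance=0.005):
    """Compare a student's answer with the independently computed reference."""
    return abs(student_answer - reference) <= tolerance


# Made-up scenario: 10% of grant applications are ineligible. An audit flags
# 80% of ineligible applications and 5% of eligible ones.
# Step 1: work out the solution before looking at any student answer.
reference = bayes_posterior(prior=0.10,
                            p_evidence_given_a=0.80,
                            p_evidence_given_not_a=0.05)

# Step 2: only then evaluate what the student submitted.
print(round(reference, 2))          # 0.64
print(is_correct(0.64, reference))  # True
print(is_correct(0.80, reference))  # False: confuses P(flag | ineligible) with P(ineligible | flag)
```

Working out the reference value first makes it harder for a plausible-looking wrong answer, such as 0.80 here, to be accepted as correct.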

Use delimiters to clearly indicate distinct parts of the input

Delimiters like triple quotation marks, XML tags, section titles, etc. can help demarcate sections of text to be treated differently.
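One informal way to check that delimiters are unambiguous is to see whether a few lines of code can split the prompt on them. The sketch below assumes the `*** skill name ***` convention from Teddy’s prompt; the function name `split_skills` and the shortened example text are hypothetical, and nothing here reflects how ChatGPT itself processes the instructions.

```python
import re


def split_skills(prompt_text):
    """Map each '*** skill name ***' header to the instructions below it."""
    header = re.compile(r"^\*\*\*[ \t]*(.+?)[ \t]*\*\*\*[ \t]*$", re.MULTILINE)
    matches = list(header.finditer(prompt_text))
    skills = {}
    for i, match in enumerate(matches):
        # A section runs from the end of its header to the start of the next one.
        if i + 1 < len(matches):
            end = matches[i + 1].start()
        else:
            end = len(prompt_text)
        skills[match.group(1)] = prompt_text[match.end():end].strip()
    return skills


# Shortened, made-up excerpt in the same shape as the prompt above.
example = """*** Practicing Bayes' rule ***
Provide a public policy scenario.

*** Calculating confidence intervals ***
Use 2 instead of 1.96 for the 95% multiplier.
"""

skills = split_skills(example)
print(list(skills))
# prints: ["Practicing Bayes' rule", 'Calculating confidence intervals']
```

If a simple pattern like this recovers every section cleanly, the boundaries between skill-specific instructions are probably clear to a reader as well.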

Include details to get more relevant answers

In order to get a highly relevant response, make sure that requests provide any important details or context. Otherwise you are leaving it up to the model to guess what you mean.

For further consideration

Learn more about what Teddy is building:

PingPong is an AI-powered virtual teaching assistant based on ChatGPT

His course on generative AI at the Harvard Kennedy School

Want to learn more about building your own custom GPT?

Learn how to make custom GPTs in Ethan and Lilach Mollick’s course AI in Education: Leveraging ChatGPT for Teaching, on Coursera

Have you used ChatGPT to generate personalized practice problems for your students?

We want to hear about it in the comments!

[1] Teddy built custom software to view anonymized transcripts of all his students’ conversations with StatGPT. But you don’t need custom software to get insights into what your students are asking. Just ask them to share their chats with you.

Love reading this and learning about your experiments with Custom GPTs and Assistants! I understand that they have different use cases and deployment methods, but I’m curious... have you noticed any significant differences in behavior or functionality between the two? Also, have you worked with document retrieval at all?

I’m working with students on experimenting with chatbots that have access to large corpora, such as hours of recorded transcripts from class lectures and video tutorials. One challenge I’ve encountered is understanding the system’s limits and actual behavior. How much source material is too much or too little? When is the chatbot actually searching through the documents versus relying on the model’s "pre-existing" knowledge? What is going on underneath the hood is a bit opaque to me, and I’ve struggled to achieve consistent results.

Would love to hear any thoughts!

This is amazing! I also created a custom GPT for my AI for Film courses, and the students and I interact with it throughout the class. It’s been inspiring and engaging for the students. Would love to chat more about this. Let’s find a time to Zoom so I can show you the one I created and we can exchange notes.